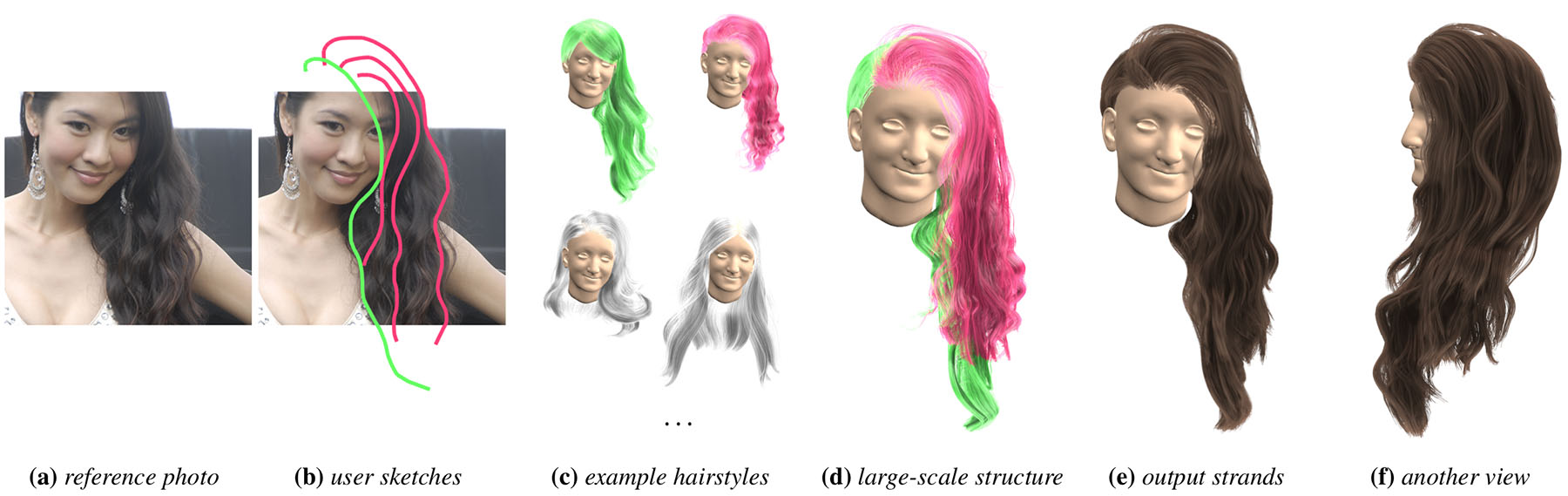

Our system takes as input a reference photo (a), a few user strokes (b), and a database of example hairstyles (c) to create the 3D target hairstyle (e). The retrieved examples that best match the user strokes are highlighted in (c) with the corresponding stroke colors, and our method combines them consistently, as shown in (d).

Hair digitization is one of the most critical and challenging tasks in creating virtual avatars. Most existing hair modeling techniques require either expensive capture devices or tedious manual effort. In this paper, we present a data-driven approach that creates a complex 3D hairstyle from a single-view photograph. We first build a database of 343 manually created 3D example hairstyles collected from online repositories. Given a reference photo of the target hairstyle and a few user strokes as guidance, we automatically search the database for several best-matching examples and consistently combine them into a single hairstyle that serves as the large-scale structure of the modeling output. We then synthesize the final strands by simultaneously enforcing three criteria: 2D similarity between the projected strands and the reference photo, 3D physical plausibility of each individual strand, and local orientation coherence between neighboring strands. We demonstrate the effectiveness and robustness of our method on a variety of hairstyles and compare it with state-of-the-art hair modeling algorithms.
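
The strand synthesis step balances three criteria at once, which can be read schematically as minimizing a weighted sum of energies. The formulation below is only an illustrative sketch: the symbols $E_{\text{proj}}$, $E_{\text{phys}}$, $E_{\text{coh}}$, the weights $w_{1}, w_{2}, w_{3}$, and the weighted-sum form itself are assumptions introduced here for exposition, not necessarily the notation or the exact objective used by the method.

$$
S^{*} = \arg\min_{S} \; w_{1}\, E_{\text{proj}}(S, I) + w_{2}\, E_{\text{phys}}(S) + w_{3}\, E_{\text{coh}}(S)
$$

Here $S$ denotes the set of synthesized strands and $I$ the reference photo. In this reading, $E_{\text{proj}}$ penalizes disagreement between the 2D projection of the strands and the photo, $E_{\text{phys}}$ penalizes physically implausible shapes of individual strands, and $E_{\text{coh}}$ penalizes orientation differences between neighboring strands.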