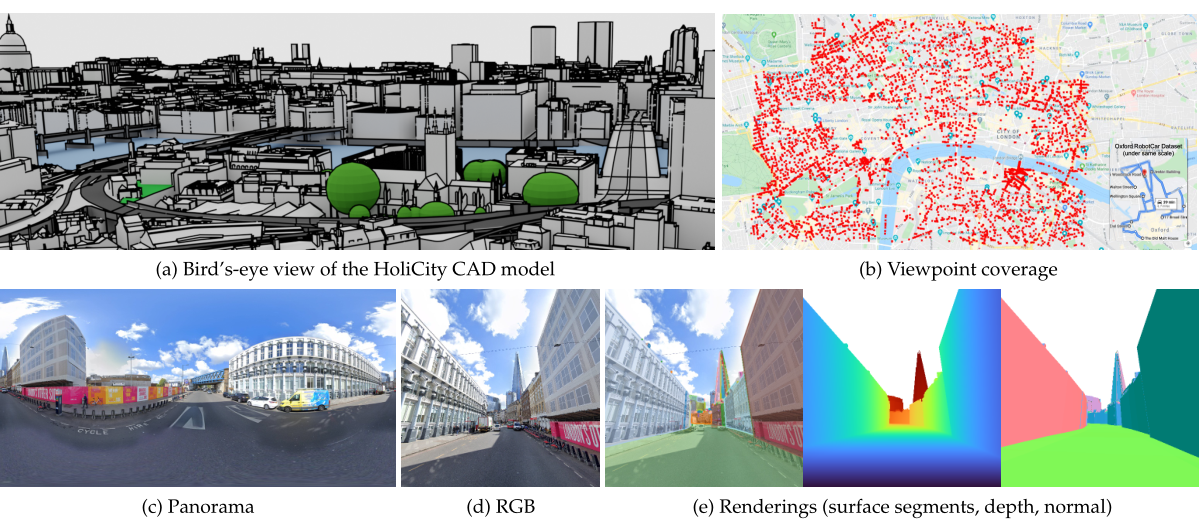

Our HoliCity dataset consists of accurate city-scale CAD models and spatially registered street-view panoramas. HoliCity covers more than 20 km² of London with 6,300 viewpoints, dwarfing previous datasets such as Oxford RobotCar [37] (1b). From the CAD models (1a) and the panoramas (1c), a wide range of clean, structured ground truths for 3D scene understanding can be generated, including perspective RGB images (1d), depth maps, plane maps, and normal maps (1e).
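
Perspective images such as (1d) can be cut from an equirectangular panorama by casting a ray through each pixel of a virtual pinhole camera and looking up where that ray lands in the panorama. The sketch below illustrates this under assumed conventions (z up, yaw measured counterclockwise from +x as seen from above, the panorama's center column facing +x, row 0 at the zenith, nearest-neighbor sampling). The function names `perspective_to_equirect` and `render_perspective` are hypothetical and not part of any HoliCity release, whose own camera conventions may differ.

```python
import math


def perspective_to_equirect(u, v, width, height, fov_deg, yaw_deg, pano_w, pano_h):
    """Map pixel (u, v) of a virtual pinhole camera to continuous panorama coordinates.

    Hypothetical helper with assumed conventions (illustrative only): z is up,
    yaw rotates the camera counterclockwise about z, yaw = 0 looks along +x,
    the panorama's center column faces +x, and panorama row 0 is the zenith.
    """
    # Focal length in pixels, derived from the horizontal field of view.
    f = 0.5 * width / math.tan(math.radians(fov_deg) / 2)
    # Ray through the pixel center in the camera frame: forward = +x, left = +y, up = +z.
    dx = f
    dy = -(u + 0.5 - width / 2)
    dz = -(v + 0.5 - height / 2)
    # Rotate the ray about the up axis by the yaw angle.
    yaw = math.radians(yaw_deg)
    rx = dx * math.cos(yaw) - dy * math.sin(yaw)
    ry = dx * math.sin(yaw) + dy * math.cos(yaw)
    # Spherical angles: longitude grows to the left, latitude grows upward.
    lon = math.atan2(ry, rx)
    lat = math.atan2(dz, math.hypot(rx, ry))
    # Equirectangular mapping: columns grow to the right, rows grow downward.
    x = (0.5 - lon / (2 * math.pi)) * pano_w
    y = (0.5 - lat / math.pi) * pano_h
    return x, y


def render_perspective(pano, width, height, fov_deg, yaw_deg):
    """Crop a perspective view from a panorama given as a list of rows (hypothetical helper)."""
    pano_h, pano_w = len(pano), len(pano[0])
    out = []
    for v in range(height):
        row = []
        for u in range(width):
            x, y = perspective_to_equirect(u, v, width, height, fov_deg, yaw_deg, pano_w, pano_h)
            # Wrap horizontally (longitude is periodic) and clamp vertically.
            col = int(math.floor(x)) % pano_w
            r = min(max(int(math.floor(y)), 0), pano_h - 1)
            row.append(pano[r][col])
        out.append(row)
    return out
```

Nearest-neighbor lookup keeps the sketch short; a practical renderer would typically interpolate bilinearly and vectorize the per-pixel loop.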

We present HoliCity, a city-scale 3D dataset with rich structural information. Currently, the dataset contains 6,300 real-world panoramas at a resolution of 13312 × 6656 that are accurately aligned with the CAD model of downtown London, which covers an area of more than 20 km²; the median reprojection error of the alignment for an average image is less than half a degree. The dataset aims to be an all-in-one data platform for research on learning abstracted, high-level, holistic 3D structures that can be derived from city CAD models, e.g., corners, lines, wireframes, planes, and cuboids, with the ultimate goal of supporting real-world applications including city-scale reconstruction, localization, mapping, and augmented reality. The accurate alignment between the 3D CAD models and the panoramas also benefits low-level 3D vision tasks such as surface normal estimation, since surface normals extracted from previous LiDAR-based datasets are often noisy. We conduct experiments to demonstrate applications of HoliCity, such as predicting surface segmentation, normal maps, depth maps, and vanishing points, and to test the generalizability of methods trained on HoliCity and other related datasets. HoliCity is available at https://holicity.io.
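
One plausible way to express an alignment error in degrees on a panorama is as an angular reprojection error: each reprojected CAD point and its observed panorama pixel are converted to viewing directions, and the angle between the two directions is measured. The sketch below assumes that interpretation and an equirectangular convention with z up, the center column facing +x, and row 0 at the zenith; it is not a claim about the exact procedure behind the reported number, and `pano_pixel_to_ray`, `angle_between_deg`, and `median_reprojection_error_deg` are hypothetical names. For scale, at a width of 13312 pixels one pixel spans 360 / 13312 ≈ 0.027° of longitude, so half a degree corresponds to roughly 18.5 pixels along the horizon.

```python
import math
import statistics


def pano_pixel_to_ray(x, y, pano_w, pano_h):
    """Unit viewing direction for continuous equirectangular coordinates (x, y).

    Hypothetical helper with an assumed convention (illustrative only): z is up,
    the center column faces +x, longitude grows to the left, row 0 is the zenith.
    """
    lon = (0.5 - x / pano_w) * 2 * math.pi
    lat = (0.5 - y / pano_h) * math.pi
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def angle_between_deg(a, b):
    """Angle between two 3D vectors in degrees.

    Uses atan2(|a x b|, a . b), which stays accurate for the sub-degree angles
    of interest here, unlike acos of the dot product.
    """
    cross = (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )
    dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
    return math.degrees(math.atan2(math.sqrt(sum(c * c for c in cross)), dot))


def median_reprojection_error_deg(observed_px, projected_px, pano_w, pano_h):
    """Median angle between observed pixels and reprojected CAD points (hypothetical helper)."""
    errors = [
        angle_between_deg(
            pano_pixel_to_ray(ox, oy, pano_w, pano_h),
            pano_pixel_to_ray(px, py, pano_w, pano_h),
        )
        for (ox, oy), (px, py) in zip(observed_px, projected_px)
    ]
    return statistics.median(errors)
```

Measuring the error as an angle rather than in pixels makes it independent of the panorama resolution and avoids the horizontal stretching of equirectangular images near the poles.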